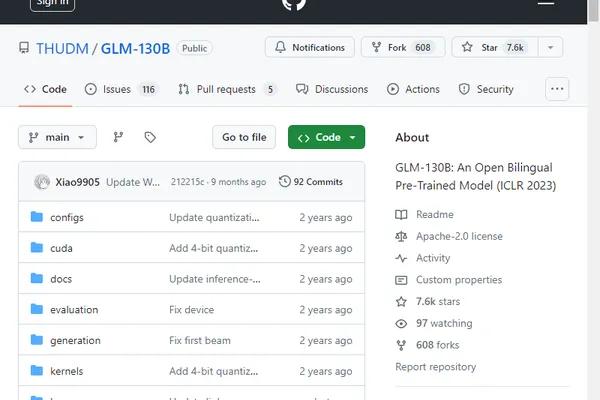

GLM-130B

GLM-130B is an open bilingual pre-trained model

December 29th, 2024

About GLM-130B

GLM-130B is a bilingual (English and Chinese) pre-trained language model with 130 billion parameters. It is designed so that inference with all 130 billion parameters can run on a single server. The model uses two different mask tokens: [MASK] for short blank filling and [gMASK] for left-to-right generation of long text. GLM-130B continues the research on the General Language Model (GLM) and aims to provide a high-quality open-source model.
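The two mask tokens correspond to two ways of hiding text from the model. The sketch below is a simplified illustration under stated assumptions and is not GLM-130B's actual data pipeline. It tokenizes by whitespace, takes hand-picked span positions (GLM samples spans randomly), and only builds the corrupted input plus the target spans. It does not model how the network generates those spans. The helper names `blank_infill` and `left_to_right` are hypothetical.

```python
from typing import List, Tuple

MASK = "[MASK]"    # short blank filling
GMASK = "[gMASK]"  # left-to-right long text generation


def blank_infill(tokens: List[str], spans: List[Tuple[int, int]]) -> Tuple[List[str], List[List[str]]]:
    """Replace each (start, end) span with [MASK].

    Returns the corrupted input and the hidden spans the model would have to fill in.
    Hypothetical helper for illustration only.
    """
    corrupted: List[str] = []
    targets: List[List[str]] = []
    pos = 0
    for start, end in sorted(spans):
        # Reject empty, out-of-range, or overlapping spans.
        if start < pos or end <= start or end > len(tokens):
            raise ValueError(f"invalid or overlapping span {(start, end)}")
        corrupted.extend(tokens[pos:start])  # keep visible context
        corrupted.append(MASK)               # one mask token per hidden span
        targets.append(tokens[start:end])
        pos = end
    corrupted.extend(tokens[pos:])
    return corrupted, targets


def left_to_right(tokens: List[str], prefix_len: int) -> Tuple[List[str], List[List[str]]]:
    """Keep a prefix as context and hide the entire suffix behind [gMASK].

    Hypothetical helper for illustration only.
    """
    if not 0 < prefix_len < len(tokens):
        raise ValueError("prefix_len must leave a non-empty prefix and suffix")
    return tokens[:prefix_len] + [GMASK], [tokens[prefix_len:]]


if __name__ == "__main__":
    toks = "the cat sat on the mat".split()
    print(blank_infill(toks, [(1, 2), (4, 6)]))
    print(left_to_right(toks, 3))
```

With [MASK], short spans can sit anywhere in the text, and the model sees context on both sides. With [gMASK], only the end of the text is hidden, so filling it in amounts to ordinary left-to-right continuation.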

Key Features

- Bilingual (English and Chinese).

- 130 billion parameters.

- Supports inference on a single server.

- Uses two mask tokens: [MASK] for short blank filling and [gMASK] for long text generation.
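To see why running 130 billion parameters on one server is a meaningful design goal, a rough weights-only estimate helps. The sketch below counts only the bytes needed to store the weights. It ignores activations, caches, and framework overhead, so it gives a lower bound. The precisions listed and the example server configurations (8 GPUs with 40 GB or 24 GB each) are assumptions chosen for illustration, not figures stated on this page. The function names are hypothetical.

```python
PARAMS = 130e9  # 130 billion parameters

# Bytes needed to store one parameter at common precisions (weights only).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Memory required just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_server(n_params: float, precision: str, num_gpus: int, gpu_mem_gb: float) -> bool:
    """True if the weights alone fit in the server's combined GPU memory.

    This is a lower-bound check: real inference also needs memory for activations.
    """
    return weight_memory_gb(n_params, precision) <= num_gpus * gpu_mem_gb


if __name__ == "__main__":
    for p in BYTES_PER_PARAM:
        print(p, weight_memory_gb(PARAMS, p), "GB")
    # Assumed example servers: 8 x 40 GB and 8 x 24 GB GPUs.
    print(fits_on_server(PARAMS, "fp16", 8, 40))
    print(fits_on_server(PARAMS, "fp16", 8, 24))
```

At 16-bit precision the weights alone take about 260 GB. That fits within an assumed 8 × 40 GB server (320 GB) but not within 8 × 24 GB (192 GB). Lower-precision storage shrinks the requirement to roughly 130 GB (8-bit) or 65 GB (4-bit).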

Use Cases

- Text generation.

- Language understanding.

- Other natural language processing tasks.

- Serving as a pre-trained base model for downstream tasks.
