Stanford Alpaca

An instruction-following LLaMA model

December 29th, 2024

About Stanford Alpaca

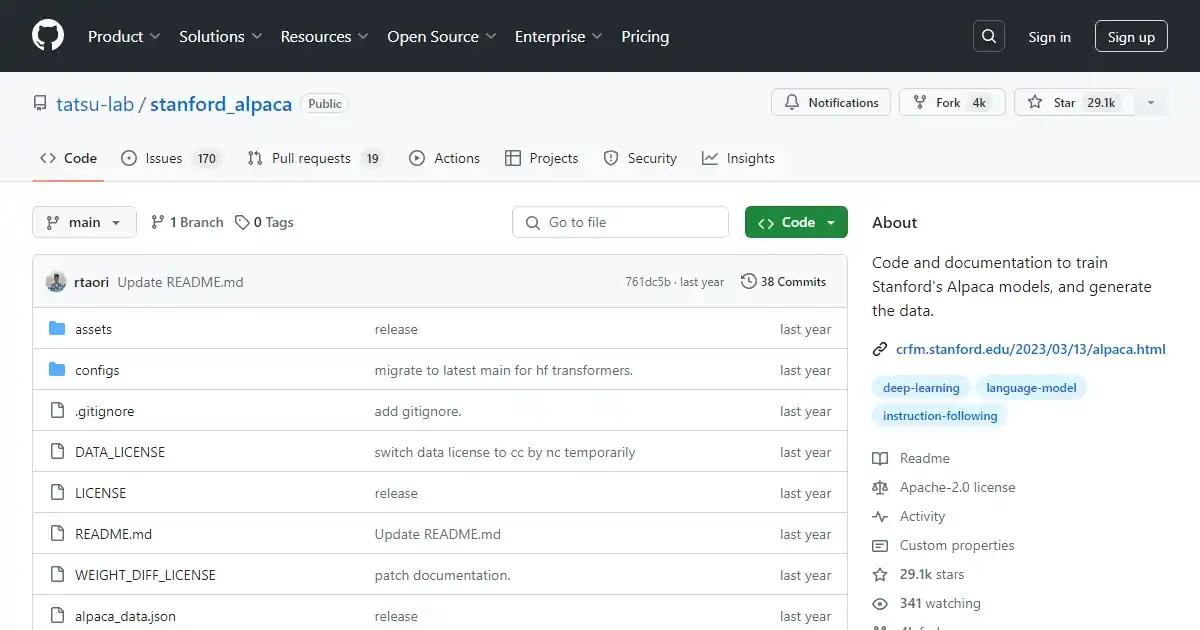

Stanford Alpaca is a project that aims to build and share an instruction-following model based on LLaMA (Large Language Model Meta AI). The project provides the code and data for training Stanford's Alpaca models, along with the code for generating that data. The model is produced by fine-tuning LLaMA on a dataset of 52K instruction-following examples, and GPT-4-based auto-annotators are used for evaluation.
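To make the idea of an instruction-following example more concrete, here is a minimal sketch of how one training record might be turned into the text a model is fine-tuned on. The field names (`instruction`, `input`, `output`), the template wording, and the helper names `build_prompt` and `build_training_text` are assumptions for illustration; they are not stated on this page and may differ from the project's actual code.

```python
# Hypothetical prompt templates in a common instruction-tuning style.
# The exact wording is an assumption, not taken from this page.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(record: dict) -> str:
    """Render one record into prompt text; the 'input' field is optional."""
    if record.get("input"):
        return PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=record["input"]
        )
    return PROMPT_NO_INPUT.format(instruction=record["instruction"])


def build_training_text(record: dict) -> str:
    """Concatenate the prompt and the target response for fine-tuning."""
    return build_prompt(record) + record["output"]


# Illustrative record (made up for this sketch, not from the 52K dataset).
example = {
    "instruction": "Translate the sentence to French.",
    "input": "Good morning",
    "output": "Bonjour",
}
print(build_training_text(example))
```

Records with an empty `input` fall back to the shorter template, so the same helper can cover both kinds of example.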

Key Features

- Instruction-following LLaMA model.

- Code and data for model training.

- Code for generating the training data.

- Uses GPT-4-based auto-annotators for evaluation.

Use Cases

- Natural language instruction understanding.

- Research on instruction-tuned language models.

- Instruction-based applications.
