UniLM

A new unified pre-trained Language Model (UniLM) for natural language understanding and generation tasks.

January 3rd, 2026

About UniLM

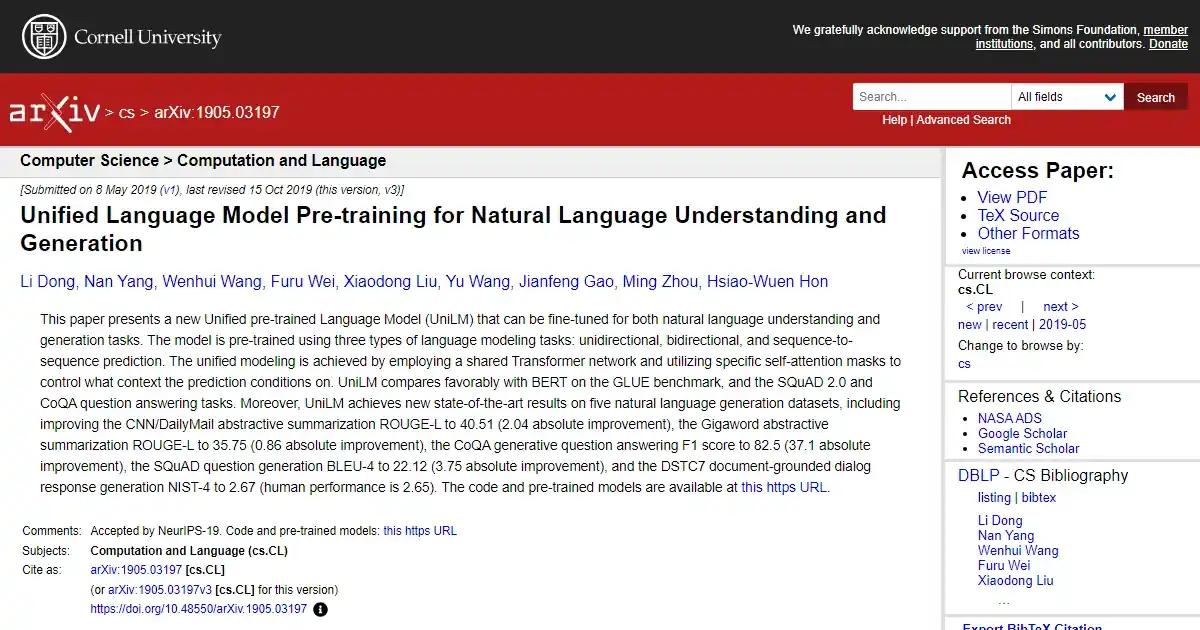

This paper presents a new Unified pre-trained Language Model (UniLM) that can be fine-tuned for both natural language understanding and generation tasks. The model is pre-trained using three types of language modeling tasks: unidirectional, bidirectional, and sequence-to-sequence prediction. The unified modeling is achieved by employing a shared Transformer network and utilizing specific self-attention masks to control what context each prediction conditions on. The paper reports state-of-the-art results for UniLM on several natural language understanding and generation benchmarks.
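In UniLM, the three pre-training objectives share one Transformer and differ in the self-attention mask that decides which tokens each position may attend to. The snippet below is a minimal sketch of that idea in plain Python, not the authors' implementation: the function name `build_attention_mask`, its parameters, and the 0/1 matrix representation are assumptions made for illustration, and it ignores special tokens and the right-to-left variant of the unidirectional objective.

```python
def build_attention_mask(mode, src_len, tgt_len=0):
    """Return an n x n 0/1 matrix where mask[i][j] == 1 means
    token i is allowed to attend to token j.

    Hypothetical helper for illustration only.
    """
    if mode not in ("bidirectional", "unidirectional", "seq2seq"):
        raise ValueError(f"unknown mode: {mode}")

    n = src_len + tgt_len
    mask = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if mode == "bidirectional":
                # Every token sees every other token.
                allowed = True
            elif mode == "unidirectional":
                # Left-to-right: a token sees itself and earlier tokens.
                allowed = j <= i
            else:  # seq2seq
                if j < src_len:
                    # Source tokens are visible to all positions.
                    allowed = True
                else:
                    # Target tokens are visible only to target positions
                    # at or after them; the source never sees the target.
                    allowed = i >= src_len and j <= i
            mask[i][j] = int(allowed)
    return mask


if __name__ == "__main__":
    for row in build_attention_mask("seq2seq", src_len=2, tgt_len=2):
        print(row)
```

With a two-token source and a two-token target, the sequence-to-sequence mask lets both source tokens attend to each other, while each target token attends to the full source plus the target tokens up to and including itself.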

Key Features

- Unified pre-trained Language Model.

- Fine-tuning for natural language understanding and generation tasks.

- Pre-training using unidirectional, bidirectional, and sequence-to-sequence prediction.

- Shared Transformer network.

Use Cases

- Natural language understanding.

- Natural language generation.
