OPT - Open Pre-trained Transformer Language Models


December 29th, 2024

About OPT - Open Pre-trained Transformer Language Models

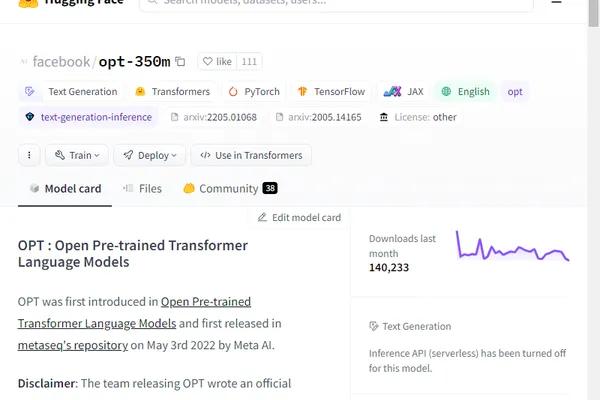

OPT (Open Pre-trained Transformer Language Models) is a family of language models developed and released by Meta AI. It was introduced in the paper "OPT: Open Pre-trained Transformer Language Models" (Zhang et al., 2022). OPT models are decoder-only Transformers, released in sizes from 125M to 175B parameters, and were trained on a large corpus of publicly available text. Checkpoints for the openly released sizes are available from the Hugging Face model repository. Refer to the official model card for detailed information on the models' capabilities and training methods.
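The following is a minimal sketch of loading a small OPT checkpoint and generating text with the Hugging Face `transformers` library. It assumes `transformers` and PyTorch are installed and that the Hub is reachable; the helper name `generate_text`, the choice of the `facebook/opt-125m` checkpoint, and greedy decoding are illustrative assumptions, not part of the original description.

```python
def generate_text(prompt: str, model_name: str = "facebook/opt-125m",
                  max_new_tokens: int = 30) -> str:
    """Return a greedy-decoded continuation of `prompt` (hypothetical helper)."""
    # Imported lazily so this helper can be defined even without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    inputs = tokenizer(prompt, return_tensors="pt")  # input_ids + attention_mask
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens,
                                do_sample=False)  # greedy decoding
    # Keep only the newly generated tokens, then drop special tokens such as </s>.
    prompt_len = inputs["input_ids"].shape[1]
    return tokenizer.decode(output_ids[0, prompt_len:], skip_special_tokens=True)
```

For example, `generate_text("Hello, my name is")` downloads the 125M checkpoint on first use and returns its continuation. Larger checkpoints follow the same pattern but need considerably more memory.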

Key Features

- Family of pre-trained models ranging from 125M to 175B parameters.

- Built on a decoder-only Transformer architecture.

- Trained on roughly 180B tokens of publicly available text.

- Available for fine-tuning and downstream tasks.

Use Cases

- Natural language understanding.

- Natural language generation.

- Question answering.

- Chatbot development.
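Base OPT checkpoints are not instruction-tuned, so for question answering a common approach is few-shot prompting: show the model a few worked question/answer pairs and let it continue the pattern. The sketch below assumes a plain "Q:"/"A:" layout, which is an illustrative convention rather than a format prescribed by OPT; `build_few_shot_prompt` and `extract_answer` are hypothetical helper names. The prompt would be passed to any causal-LM generation call, and the continuation trimmed to its first line.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Join worked Q/A pairs and a new question into one prompt (hypothetical format)."""
    blocks = [f"Q: {q}\nA: {a}\n" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")  # the model is expected to continue after "A:"
    return "\n".join(blocks)


def extract_answer(continuation: str) -> str:
    """Keep only the first line of the generated text, since a base model
    may go on to invent further Q/A pairs."""
    return continuation.strip().split("\n", 1)[0].strip()


prompt = build_few_shot_prompt(
    [("What is the capital of France?", "Paris")],
    "What is the capital of Japan?",
)
print(prompt)
```

Trimming at the first newline is a simple heuristic; stopping generation on a newline token or a "Q:" marker would achieve the same effect during decoding.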
