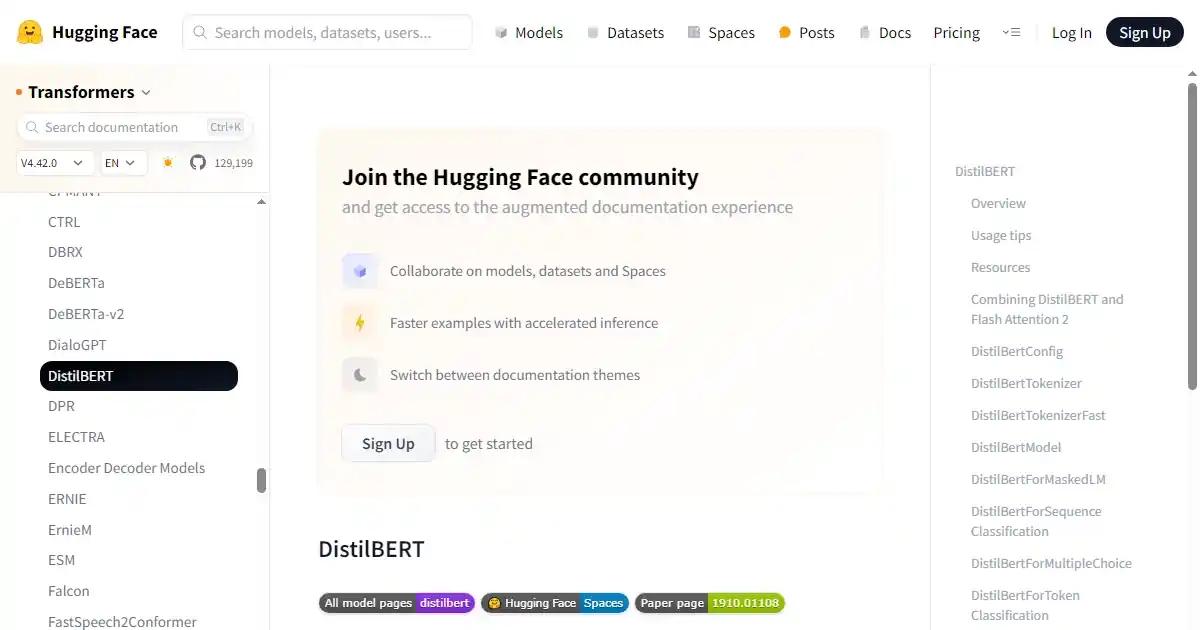

DistilBERT

DistilBERT model for extractive question-answering tasks

January 3rd, 2026

About DistilBERT

The Hugging Face Transformers library is an open-source machine learning library for PyTorch, TensorFlow, and JAX, so developers can work in whichever framework they prefer. It provides pre-trained models and tools to build, train, and deploy natural language processing models, and it supports both fine-tuning pre-trained models and developing custom ones.

DistilBERT is a smaller, faster version of the original BERT model, trained with knowledge distillation. Its authors report that it is about 40% smaller and 60% faster than BERT while retaining about 97% of BERT's language-understanding performance. For extractive question-answering tasks such as SQuAD, the model adds a span-classification head: a linear layer on top of the hidden-states output computes a span start logit and a span end logit for every token. The predicted answer is the span of the input text whose start and end positions have the highest combined score, with the start not after the end.
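The span-classification head described above can be sketched in NumPy. The sketch assumes the encoder has already produced one hidden-state vector per token. The names `qa_head`, `best_span`, and `max_answer_len` are hypothetical, and the weights are random placeholders rather than a trained DistilBERT checkpoint. The decoder is simplified: it scores every valid span by start logit plus end logit. A production decoder would typically also skip question tokens and special tokens.

```python
import numpy as np


def qa_head(hidden_states, weight, bias):
    """Project per-token hidden states to start and end logits.

    hidden_states: (seq_len, dim) array of encoder outputs.
    weight: (dim, 2) and bias: (2,) form one linear layer with two outputs.
    """
    logits = hidden_states @ weight + bias  # shape (seq_len, 2)
    start_logits = logits[:, 0]  # score for "answer starts at this token"
    end_logits = logits[:, 1]    # score for "answer ends at this token"
    return start_logits, end_logits


def best_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end, score) with the highest start + end logit.

    Only spans with start <= end and at most max_answer_len tokens are
    considered. The default of 15 is a hypothetical value for illustration.
    """
    best = (0, 0, float("-inf"))
    n = len(start_logits)
    for s in range(n):
        for e in range(s, min(n, s + max_answer_len)):
            score = float(start_logits[s] + end_logits[e])
            if score > best[2]:
                best = (s, e, score)
    return best


# Toy example: random weights stand in for a trained head.
rng = np.random.default_rng(0)
tokens = ["paris", "is", "the", "capital", "of", "france"]
hidden = rng.normal(size=(len(tokens), 8))
W, b = rng.normal(size=(8, 2)), np.zeros(2)
start, end, score = best_span(*qa_head(hidden, W, b))
print(tokens[start : end + 1], score)
```

The double loop costs O(n · max_answer_len), which is cheap for typical context lengths. The start ≤ end constraint keeps the head from returning an inverted span, even when a late token has a high start logit and an early token has a high end logit.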

Key Features

- DistilBERT model for extractive question-answering tasks.

- Pre-trained checkpoints, including ones already fine-tuned on SQuAD, available for immediate use.

- Compatibility with PyTorch, TensorFlow, and JAX.

- Support for fine-tuning and custom model development.
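Fine-tuning for extractive QA needs two things: token-level answer labels and a loss over the start and end logits. The sketch below shows both, under stated assumptions. `whitespace_offsets` is a hypothetical stand-in tokenizer; real fast tokenizers in Transformers can return comparable character offsets when called with `return_offsets_mapping=True`. The other helper names are also illustrative. The loss averages the cross-entropy of the start position and the end position, which is a common formulation for this task.

```python
import re

import numpy as np


def whitespace_offsets(text):
    """Hypothetical stand-in tokenizer: (start, end) char offsets per word."""
    return [(m.start(), m.end()) for m in re.finditer(r"\S+", text)]


def char_span_to_token_span(offsets, answer_start, answer_end):
    """Map a character span [answer_start, answer_end) to inclusive token indices.

    Returns None if the answer boundaries do not fall inside any token.
    """
    start_tok = end_tok = None
    for i, (s, e) in enumerate(offsets):
        if start_tok is None and s <= answer_start < e:
            start_tok = i
        if s < answer_end <= e:
            end_tok = i
    if start_tok is None or end_tok is None:
        return None
    return start_tok, end_tok


def cross_entropy(logits, target):
    """Negative log-softmax probability of the target index (numerically stable)."""
    shifted = logits - logits.max()
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[target])


def span_loss(start_logits, end_logits, start_pos, end_pos):
    """Average of the start-position and end-position cross-entropy losses."""
    return (cross_entropy(start_logits, start_pos) + cross_entropy(end_logits, end_pos)) / 2


context = "Paris is the capital of France"
answer = "the capital"
answer_start = context.index(answer)
labels = char_span_to_token_span(whitespace_offsets(context), answer_start, answer_start + len(answer))
print(labels)  # (2, 3)

# Toy logits that favour the labelled span; a trained head would produce these.
start_logits = np.array([0.0, 0.0, 4.0, 0.0, 0.0, 0.0])
end_logits = np.array([0.0, 0.0, 0.0, 4.0, 0.0, 0.0])
print(span_loss(start_logits, end_logits, *labels))
```

Returning None for misaligned answers lets a preprocessing step drop or flag those examples instead of training on wrong labels.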

Use Cases

- Extractive question answering on datasets such as SQuAD.

- Text classification.

- Named entity recognition.

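- Masked-token prediction (filling in blanked-out words). DistilBERT is an encoder-only model, so it is not designed for open-ended text generation.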
