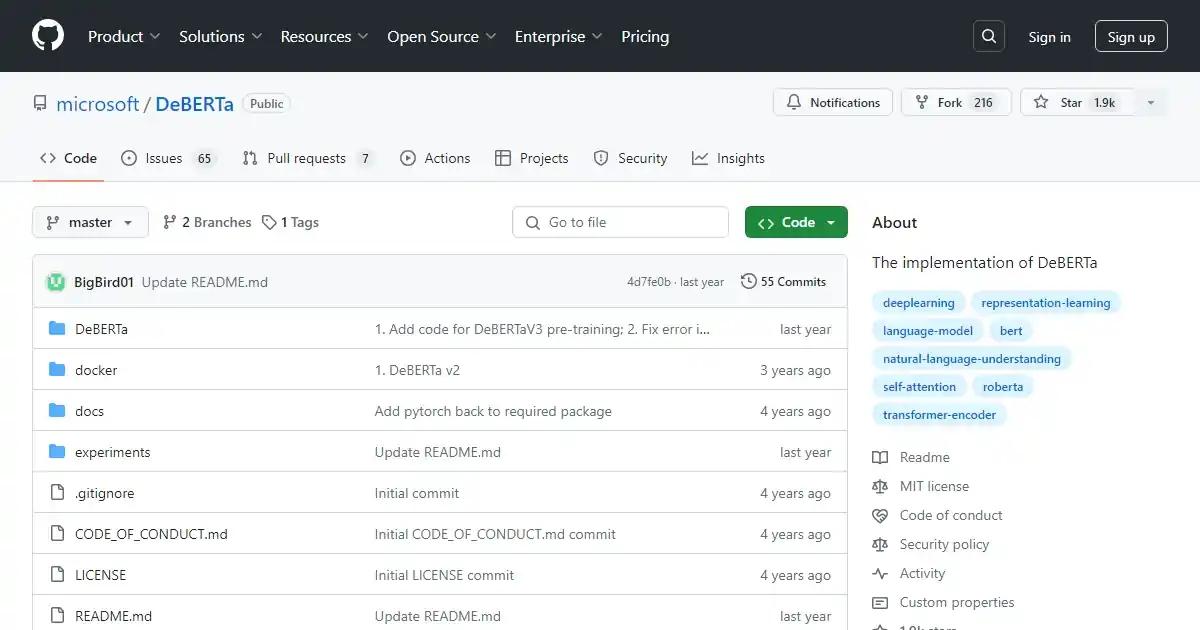

DeBERTa

DeBERTa: Decoding-enhanced BERT with Disentangled Attention.

December 29th, 2024

About DeBERTa

DeBERTa is a large-scale pre-trained language model developed by Microsoft. It improves on BERT and RoBERTa with two techniques: disentangled attention and an enhanced mask decoder. DeBERTa achieves strong results on natural language understanding (NLU) tasks, and its largest model (1.5B parameters) surpassed the human baseline on the SuperGLUE benchmark. Within the DeBERTa-V3 family, the smallest model, DeBERTa-V3-XSmall, has only 22M backbone parameters yet outperforms RoBERTa-Base and XLNet-Base on MNLI and SQuAD v2.0.
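For reference, disentangled attention can be written as follows, using the notation of the DeBERTa paper and simplified to a single attention head. H holds the token hidden states, P is the relative position embedding table shared across layers, d is the hidden size, and k is the maximum relative distance. The score between tokens i and j is the sum of a content-to-content, a content-to-position and a position-to-content term:

```latex
\begin{aligned}
Q^c &= H W_{q,c}, \quad K^c = H W_{k,c}, \quad V^c = H W_{v,c}, \quad Q^r = P W_{q,r}, \quad K^r = P W_{k,r} \\
\tilde{A}_{i,j} &= Q^c_i \, {K^c_j}^{\top} + Q^c_i \, {K^r_{\delta(i,j)}}^{\top} + K^c_j \, {Q^r_{\delta(j,i)}}^{\top} \\
H_o &= \operatorname{softmax}\!\left( \frac{\tilde{A}}{\sqrt{3d}} \right) V^c \\
\delta(i,j) &= \begin{cases}
0 & \text{if } i - j \le -k \\
2k - 1 & \text{if } i - j \ge k \\
i - j + k & \text{otherwise}
\end{cases}
\end{aligned}
```

The factor 3d in the scaling reflects the three summed score terms.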

Key Features

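- Disentangled attention: each token is represented by separate content and position vectors, and attention weights are computed from content and relative-position terms.

- Enhanced mask decoder: absolute position information is incorporated in the decoding layer that predicts masked tokens during pre-training.

- Multiple versions available, including DeBERTa-V3-XSmall.

- Large-scale pre-training with strong results on NLU tasks.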
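The disentangled attention feature above can be sketched in plain Python. This is a minimal single-head illustration, assuming the score formulation from the DeBERTa paper (content-to-content, content-to-position and position-to-content terms, scaled by 1/sqrt(3d)); it omits masking, biases, multiple heads and the position-bucketing refinements of the released models. The helper functions and the projection names in `W` (`q_c`, `k_c`, `v_c`, `q_r`, `k_r`) are hypothetical and are not Microsoft's implementation.

```python
import math
import random


def delta(i, j, k):
    """Relative distance bucket delta(i, j) as defined in the DeBERTa paper, in [0, 2k-1]."""
    if i - j <= -k:
        return 0
    if i - j >= k:
        return 2 * k - 1
    return i - j + k


def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (lists of lists)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def disentangled_attention(H, P, W, k):
    """H: N x d hidden states; P: 2k x d relative position embeddings;
    W: dict of d x d projection matrices (hypothetical key names)."""
    d = len(H[0])
    Qc, Kc, Vc = matmul(H, W["q_c"]), matmul(H, W["k_c"]), matmul(H, W["v_c"])
    Qr, Kr = matmul(P, W["q_r"]), matmul(P, W["k_r"])
    n = len(H)
    scores = [
        [
            dot(Qc[i], Kc[j])                   # content-to-content
            + dot(Qc[i], Kr[delta(i, j, k)])    # content-to-position
            + dot(Kc[j], Qr[delta(j, i, k)])    # position-to-content
            for j in range(n)
        ]
        for i in range(n)
    ]
    scale = math.sqrt(3 * d)  # three score terms, hence 3d
    probs = [softmax([s / scale for s in row]) for row in scores]
    return matmul(probs, Vc), probs


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Usage with small random weights: 4 tokens, hidden size 8, max distance k = 2.
rng = random.Random(0)
N, d, k = 4, 8, 2
H = rand_matrix(N, d, rng)
P = rand_matrix(2 * k, d, rng)
W = {name: rand_matrix(d, d, rng) for name in ("q_c", "k_c", "v_c", "q_r", "k_r")}
out, probs = disentangled_attention(H, P, W, k)
```

The nested loops make the O(N²) pairwise scoring explicit; a real model computes the same terms with batched matrix products and shares P across all layers.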

Use Cases

- Natural language understanding tasks.

- Question answering.

- Text classification.

- Language generation and translation.
