Chinchilla

Chinchilla is a compute-optimal model with 70B parameters.

December 29th, 2024

About Chinchilla

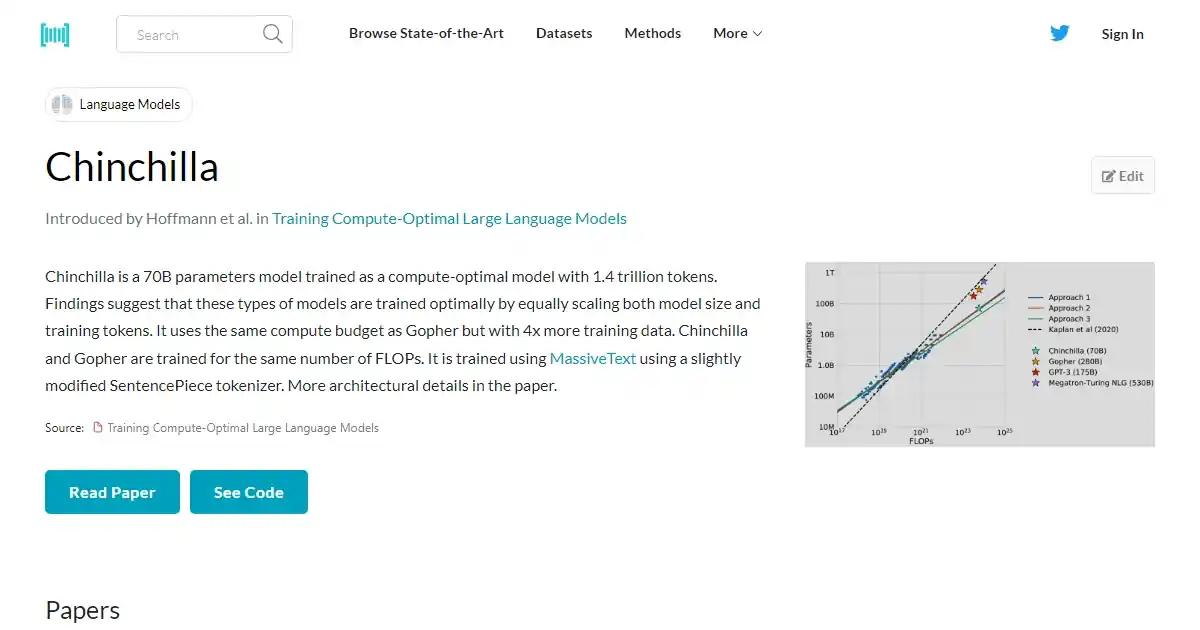

Chinchilla is a large language model developed by DeepMind. It has 70 billion parameters and was trained on 1.4 trillion tokens. It is described as compute-optimal because, for a fixed training compute budget, model size and the number of training tokens were chosen together rather than maximizing parameter count alone. Chinchilla has been shown to outperform previous models, including the larger Gopher, on a range of benchmarks.
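To make the two headline figures concrete, the short sketch below works out the ratio of training tokens to parameters and a rough training compute estimate. The parameter and token counts come from the description above; the `6 * N * D` FLOPs estimate is a commonly used rule of thumb and is an assumption here, not something this page states. The function names are illustrative only.

```python
# Figures quoted in the description above.
PARAMS = 70e9    # 70 billion parameters
TOKENS = 1.4e12  # 1.4 trillion training tokens


def tokens_per_parameter(params: float, tokens: float) -> float:
    """Return how many training tokens were used per model parameter."""
    return tokens / params


def approx_training_flops(params: float, tokens: float) -> float:
    """Rough training compute estimate.

    Assumption: the widely used approximation C ~ 6 * N * D
    (N = parameters, D = training tokens). It is a rule of thumb,
    not an exact accounting of the actual training run.
    """
    return 6.0 * params * tokens


ratio = tokens_per_parameter(PARAMS, TOKENS)
flops = approx_training_flops(PARAMS, TOKENS)

print(f"tokens per parameter: {ratio:.0f}")
print(f"approx. training FLOPs: {flops:.2e}")
```

Under these assumptions the ratio comes out to about 20 tokens per parameter and the compute estimate to roughly 5.9e23 FLOPs. Both numbers are back-of-the-envelope illustrations rather than reported measurements.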

Key Features

- Compute-optimal model with 70B parameters.

- Trained on 1.4 trillion tokens.

- Balances model size and training tokens for a fixed compute budget.

- Outperforms previous models, including Gopher, on a range of benchmarks.
